FakeFinder

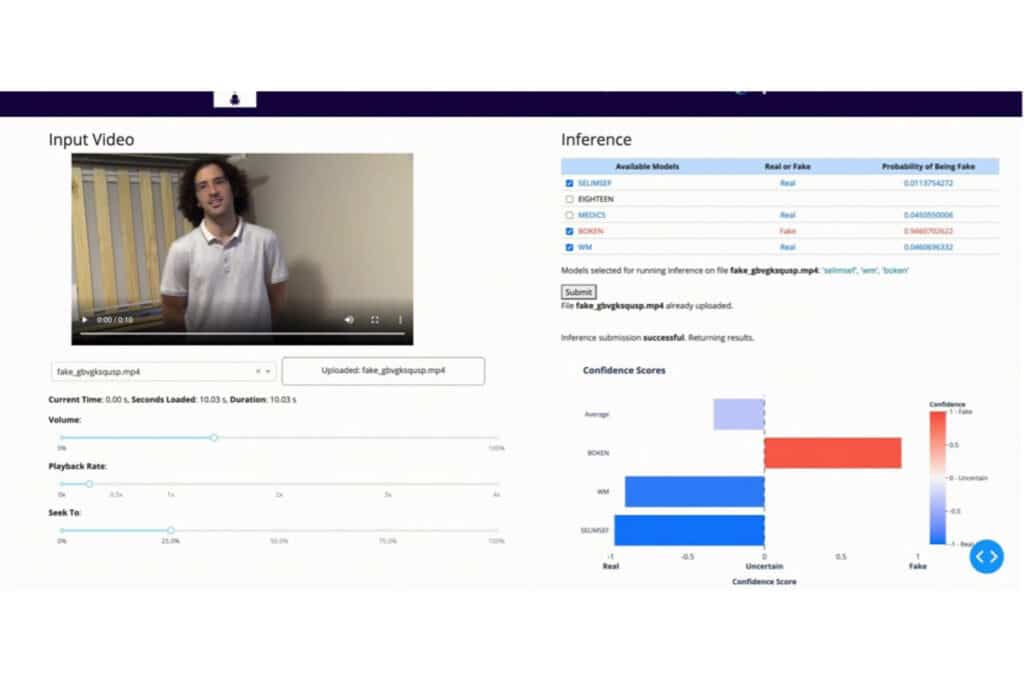

Fake videos are increasingly popping up around us because deepfake generation models are getting better at producing realistic output at an alarming scale. FakeFinder was a hands-on project aimed at better understanding which Open Source models can be effective at debunking such videos at a similar rate.

AI Assurance Auditing

IQT Labs is developing a pragmatic, multi-disciplinary approach to auditing AI & ML tools. Our goal is to help people understand the limitations of AI/ML and identify risks before these tools are deployed in high-stakes situations. We believe auditing can help diverse stakeholders build trust in emerging technologies…when that trust is warranted. For more info, check out this report, which describes our auditing approach and what we found when we audited FakeFinder, an Open Source deepfake detection tool.

IQT Explains: The Importance of Bias Testing in AI Assurance

An IQT Podcast episode exploring how we test and assess AI technology to minimize unwanted biases and consider legalities and ethics. Listen in if you want to discover how AI technology is being evaluated from a legal and ethical stance.

IQT Explains: AI Assurance

What happened when we audited a deepfake detection tool called FakeFinder? Our AI Assurance series continues with a podcast detailing findings from our audit of this Open Source tool, which predicts whether a video is a deepfake.

AI Assurance: Do deepfakes discriminate?

Do deepfakes discriminate? We explore this question in the fifth blog post of our AI Assurance series, discussing the bias portion of our audit of the deepfake detection tool FakeFinder.

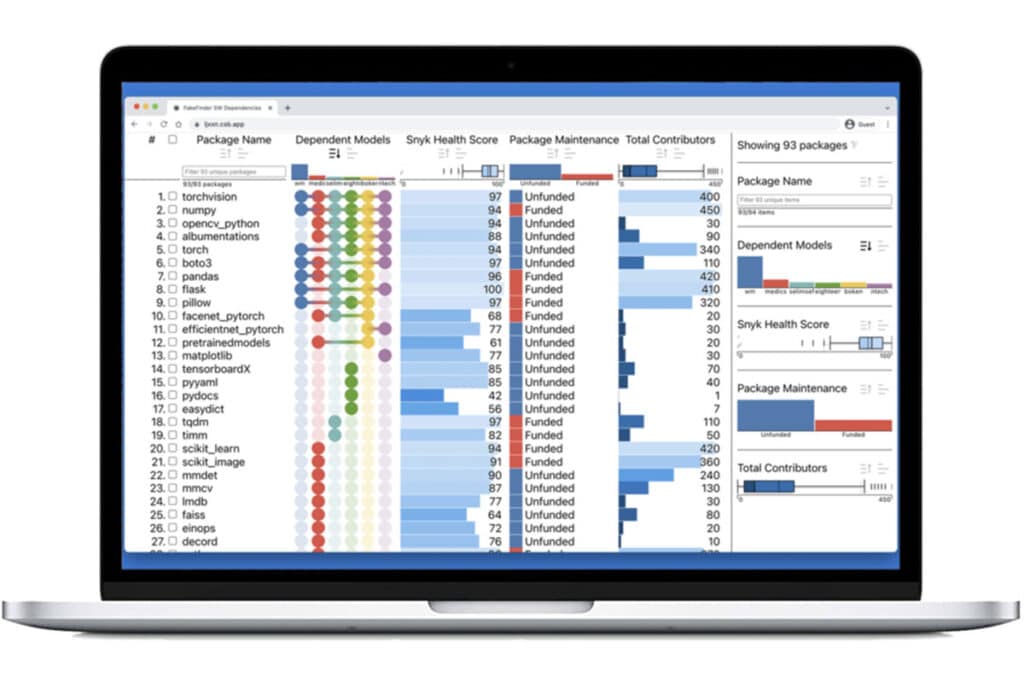

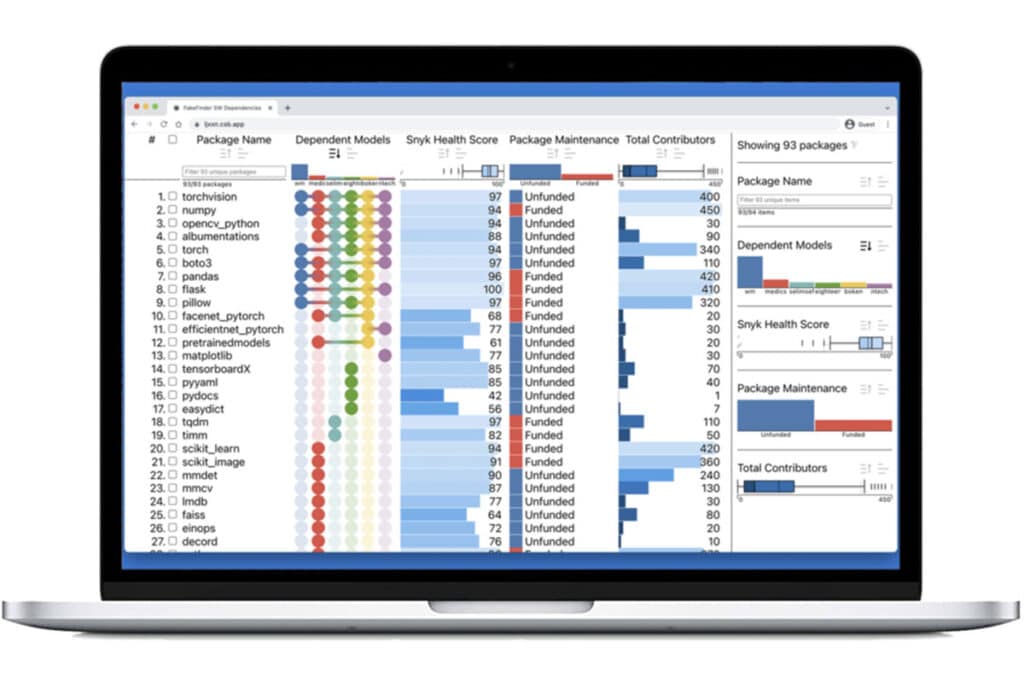

Open Source Software Nutrition Labels: Scaling Up with Anaconda Python

Our first blog post in this series introduced our Nutrition Label concept, focused on providing transparency for Open Source software. This final post in the series explores how we scaled up this Open Source Software “Nutrition Label” prototype to analyze the Anaconda Linux/Python 3.9 ecosystem.

AI Assurance: An “ethical matrix” for FakeFinder

With so many ways that AI can fail, how do we know when an AI tool is “ready” and “safe” for deployment? The fourth blog post in our AI Assurance series explores the ethics portion of our audit of FakeFinder, a deepfake detection tool.

Open Source Software Nutrition Labels: An AI Assurance Application

Since software supply chains are often opaque and complex, we recently explored the concept of an Open Source Software “Nutrition Label” as part of our AI Assurance work.

AI Assurance: A deep dive into the cybersecurity portion of our FakeFinder audit

What happened when we dove into the cybersecurity portion of our FakeFinder audit? Read on to find out.

IQT Labs Presents FakeFinder, an Open Source Tool to Help Detect Deepfakes

How do you know if a video clip is representative of reality, or that the person you’re talking to on the other side of a video call is who they appear to be? IQT Labs built the FakeFinder framework to highlight some of the top-performing deepfake detection algorithms developed as Open Source.